When I created this lib, love.data.pack/unpack did not exist yet.

Some benefits:

- It supports more data types than pack/unpack. BlobWriter/BlobReader can serialize tables and 64-bit integers, both through dedicated read/write functions and through the pack/unpack functions. LÖVE's pack/unpack can't handle tables or integers > 2^52.

- Pack/unpack is limited to the format string syntax, which can become tedious and a serious limitation, depending on your use case. This library can be used like file I/O, and provides read/write functions for each supported data type, while also having support for the pack/unpack syntax.

- BlobWriter uses an efficient storage scheme for strings and (optionally) 32-bit integers. It has a special size-optimized 32-bit integer type (vs32/vu32) that is also used internally to encode string lengths.

- Pack/unpack uses native data sizes, making it harder to use in cross-platform use cases, while Blob.lua uses fixed data sizes.

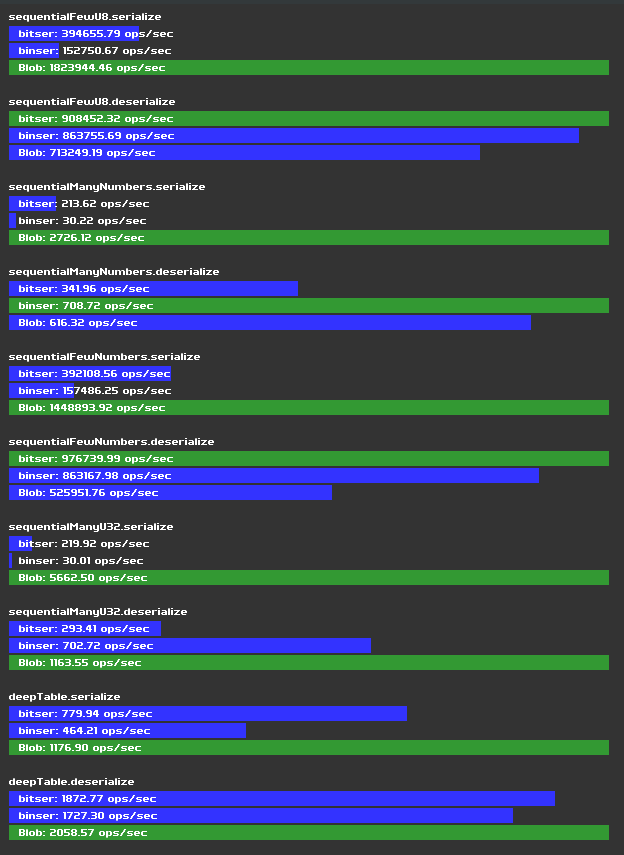

- It's much faster in general.
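To illustrate the size-optimized integer type mentioned above, here is a minimal sketch of a variable-length unsigned 32-bit encoding in plain Lua. It uses a common LEB128-style scheme (7 payload bits per byte, high bit set when more bytes follow). This is an assumption for illustration only; the actual wire format of vs32/vu32 in the library may differ, and the function names `encodeVarU32`/`decodeVarU32` are hypothetical.

```lua
-- Encode an unsigned 32-bit integer as 1 to 5 bytes.
-- Each byte carries 7 bits of the value; the high bit marks "more bytes follow".
local function encodeVarU32(value)
    assert(value >= 0 and value <= 4294967295 and value % 1 == 0,
        'expected an unsigned 32-bit integer')
    local bytes = {}
    repeat
        local low7 = value % 128
        value = math.floor(value / 128)
        if value > 0 then
            low7 = low7 + 128 -- set the continuation bit
        end
        bytes[#bytes + 1] = string.char(low7)
    until value == 0
    return table.concat(bytes)
end

-- Decode a value written by encodeVarU32, starting at byte position `pos`.
-- Returns the value and the position of the first byte after it.
local function decodeVarU32(str, pos)
    pos = pos or 1
    local value, scale = 0, 1
    while true do
        local byte = str:byte(pos)
        assert(byte, 'truncated input')
        pos = pos + 1
        value = value + (byte % 128) * scale
        if byte < 128 then
            break -- continuation bit not set: last byte
        end
        scale = scale * 128
    end
    return value, pos
end
```

With a scheme like this, a length below 128 costs one byte instead of four, which adds up when serializing many short strings.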

Performance in comparison to table.concat:

```lua
local t1 = love.timer.getTime()
local blob = BlobWriter(nil, 2 ^ 20)
-- create a 10 MiB string of random bytes
for i = 1, 10 * 2 ^ 20 do
    blob:u8(love.math.random(0, 255))
end
local str = blob:tostring()
local t2 = love.timer.getTime()
print(1000 * (t2 - t1), 'ms')
```

vs.

```lua
local t1 = love.timer.getTime()
local tmp = {}
-- create a 10 MiB string of random bytes
for j = 1, 10 * 2 ^ 20 do
    tmp[#tmp + 1] = string.char(love.math.random(0, 255))
end
local str = table.concat(tmp)
local t2 = love.timer.getTime()
print(1000 * (t2 - t1), 'ms')
```

table.concat: 2581.4122849988 ms

Performance in comparison to love.data.pack, where table.concat is not the limiting factor:

```lua
local t1 = love.timer.getTime()
local blob = BlobWriter()
for j = 1, 1e6 do
    blob:u32(j):u8(0)
end
local t2 = love.timer.getTime()
print(1000 * (t2 - t1), 'ms')
```

vs.

```lua
local t1 = love.timer.getTime()
-- note: data is discarded here, while it's retained with BlobWriter
for j = 1, 1e6 do
    love.data.pack('string', 'I4B', j, 0)
end
local t2 = love.timer.getTime()
print(1000 * (t2 - t1), 'ms')
```

love.data.pack: 281.65941900079 ms

In direct comparison, BlobWriter:pack is slower than love.data.pack.

```lua
local t1 = love.timer.getTime()
local blob = BlobWriter()
for j = 1, 1e6 do
    blob:pack('LB', j, 0)
end
local t2 = love.timer.getTime()
print(1000 * (t2 - t1), 'ms')
```

(vs. the ~282 ms of love.data.pack from above)

But you can of course combine love.data.pack with BlobWriter.

Note that this comparison is flawed: BlobWriter:pack already includes the cost of appending to a buffer, whereas the love.data.pack loop discards its results and never concatenates them. Also, BlobWriter:pack does not support all features of love.data.pack.
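Combining love.data.pack with BlobWriter, as suggested above, could look roughly like the sketch below. It assumes the writer offers a raw-write method that appends a string's bytes without a length prefix; the method name `raw` and the helper `appendPacked` are assumptions for illustration, so check the library's documentation for the actual name.

```lua
-- Pack values with love.data.pack and append the result to a BlobWriter.
-- Assumption: `blob:raw(str)` appends the bytes of `str` unchanged.
local function appendPacked(blob, format, ...)
    -- With the 'string' container, love.data.pack returns a Lua string
    local packed = love.data.pack('string', format, ...)
    blob:raw(packed)
    return blob
end

-- Usage (inside LÖVE), with explicit little-endian, fixed-size fields:
-- local blob = BlobWriter()
-- for j = 1, 1e6 do
--     appendPacked(blob, '<I4B', j, 0)
-- end
```

This keeps the format-string features of love.data.pack while letting BlobWriter handle buffer growth.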